Introduction

HeKatE is about researching selected knowledge engineering methods and applying them effectively in practical software engineering.

We aim to improve:

design methods and process,

automated implementation,

automated, possibly formal, analysis and verification.

We aim to provide methods and tools in the areas of:

knowledge representation, for the visual design,

knowledge translation, for the automated implementation,

knowledge analysis, for the formal verification.

Motivation

For an extended version, see gjn2007flairs-hekate.

Knowledge-based systems (KBS) are an important class of intelligent systems originating from the field of Artificial Intelligence.

In AI, rules are probably the most popular choice for building knowledge-based systems, the so-called rule-based expert systems.

Building real-life KBS is a complex task.

Since their architecture is fundamentally different from classic software, typical Software Engineering approaches cannot be applied efficiently.

Software Engineering (SE) does not contribute many concepts to Knowledge Engineering (KE).

However, it does provide some important tools and techniques for it.

On the other hand, some important Knowledge Engineering conceptual achievements and methodologies can be successfully transferred to and applied in the domain of Software Engineering.

What makes KBS distinctive is the separation of knowledge storage (the knowledge base) from the knowledge processing facilities.

In order to store knowledge, KBS use various knowledge representation methods, which are declarative in nature.

The knowledge engineering process should capture the expert knowledge and represent it in a way that is suitable for processing.

The actual structure of a KBS does not need to be system-specific; it should not "mimic" or model the structure of the real-world problem.

The level at which KE should operate is often referred to as the knowledge level.

Historically, there has always been strong mutual feedback between SE and computer programming tools.

At the same time, these tools have been strongly determined by the actual architecture of computers themselves.

For a number of years, there has been a clear trend for software engineering to become as implementation-independent as possible.

It could be said that these days software engineering is becoming more knowledge-based, while knowledge engineering is becoming more about software engineering.

This opens multiple opportunities for both approaches to improve and benefit.

Software Engineering was derived from pure programming as a set of paradigms, procedures, specifications, and tools.

It is heavily influenced by the way programs work, that is, by the sequential approach based on the Turing Machine concept.

Historically, as the modelled systems became more complex, SE became more and more declarative in order to model systems more comprehensively.

This made the design stage independent of programming languages, which resulted in a number of approaches; the best example is MDA (Model Driven Architecture).

So, while programming itself remains mostly sequential, designing becomes more declarative.

To summarize, the constant sources of errors in software engineering are:

The Semantic Gap between existing design methods, which are becoming more and more declarative, and implementation tools that remain sequential/procedural.

Evaluation problems due to large differences in the semantics of design methods and the lack of a formal knowledge model. They appear at many stages of the SE process and concern not just the correctness of the final software, but also the validity of the design model and of the transformation from the model to the implementation.

The so-called Analysis Specification Gap, which is the difficulty of properly formulating requirements and of transforming them into an effective design and then into an implementation.

The so-called Separation Problem, which is the lack of separation between Core Software Logic, software interfaces and presentation layers.

Concepts

Some of the main concepts behind HeKatE are:

providing an integrated design and implementation process, thus

closing the semantic gap, and

automating the implementation, providing

an executable solution that includes

an on-line formal analysis of the design, carried out during the design itself.

HeKatE uses methods and tools in the areas of:

knowledge representation, for the visual design,

knowledge translation, for the automated implementation,

knowledge analysis, for the formal verification.

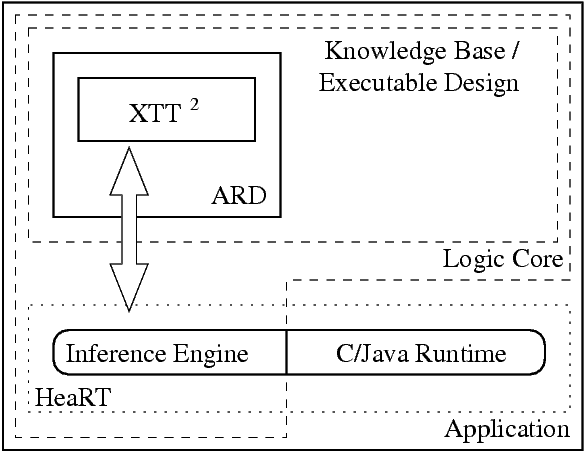

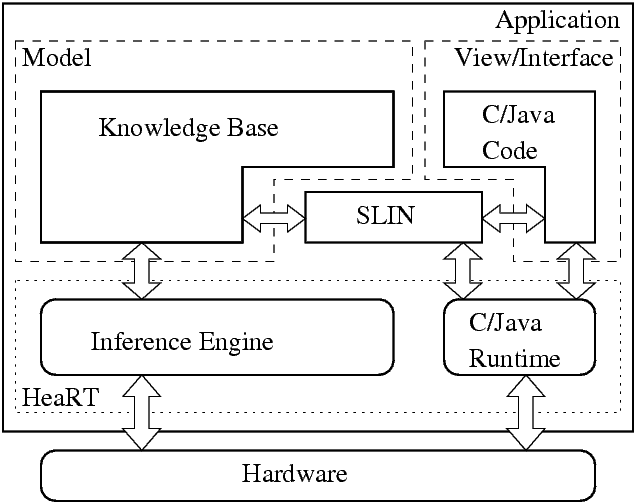

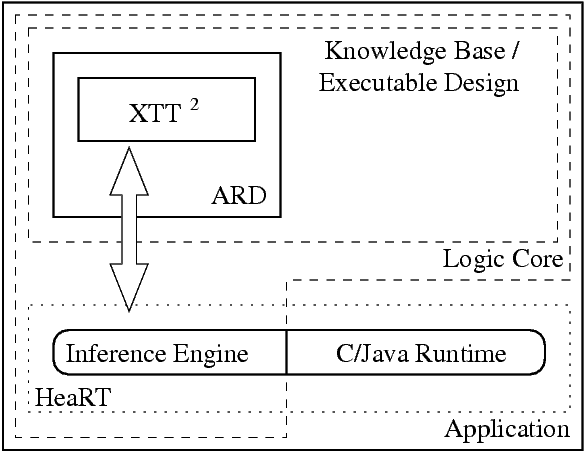

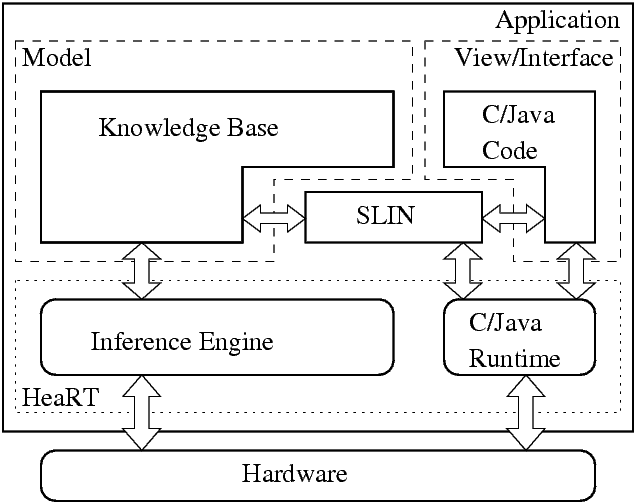

A principal idea in this approach is to model, represent, and store the logic behind the software (sometimes referred to as business logic) using advanced knowledge representation methods taken from KE.

The logic is then encoded using a declarative representation.

The logic core would then be embedded into a business application or an embedded control system.

The remaining parts of the business or control applications, such as interfaces or presentation aspects, would be developed with classic object-oriented or procedural programming languages, such as Java or C.
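To make this separation concrete, here is a minimal Python sketch (Python is used only for illustration; HeKatE itself targets Prolog for the logic core and Java or C for the rest). The rule set, the attribute names `customer` and `discount`, and the functions `decide` and `render_discount` are all hypothetical. The business logic lives in a declarative data structure, a generic engine evaluates it, and the interface code only formats the result.

```python
# Hypothetical declarative logic core: business rules are plain data
# (admissible attribute values -> decisions), not procedural code.
RULES = [
    ({"customer": {"gold", "platinum"}}, {"discount": 10}),
    ({"customer": {"regular"}}, {"discount": 0}),
]


def decide(rules, facts):
    """Generic engine: return the decisions of the first rule whose conditions hold."""
    for conditions, decisions in rules:
        if all(facts.get(attr) in allowed for attr, allowed in conditions.items()):
            return dict(decisions)
    return {}


def render_discount(customer):
    """Presentation layer: formats the result but encodes no business logic."""
    result = decide(RULES, {"customer": customer})
    if "discount" not in result:
        return f"{customer}: no applicable rule"
    return f"{customer}: {result['discount']}% discount"


print(render_discount("gold"))     # gold: 10% discount
print(render_discount("visitor"))  # visitor: no applicable rule
```

Changing the discount policy then means editing `RULES` only; `render_discount` stays untouched.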

The HeKatE project should eventually provide a coherent runtime environment for running the combined Prolog and Java/C code.

From the implementation point of view, HeKatE is based on the idea of multiparadigm programming.

The target application combines the logic core, implemented in Prolog, with object-oriented interfaces in Java or procedural ones in ANSI C.

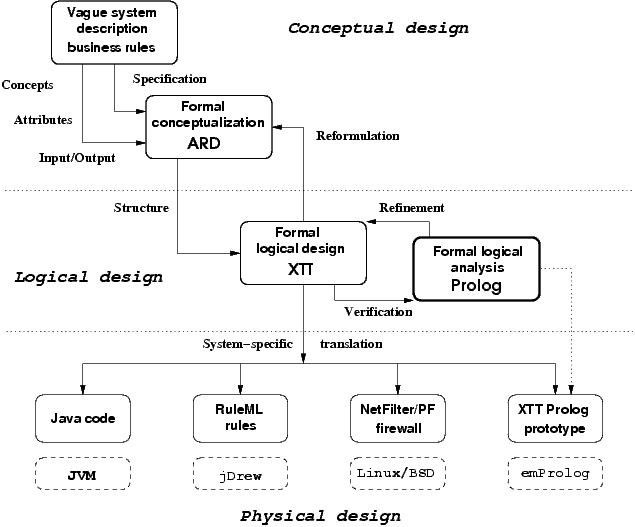

The Semantic Gap problem is addressed by providing declarative design methods for the business logic.

There is no translation from the formal, declarative design into a separate implementation language.

The knowledge base is specified and encoded directly in the Prolog language.

The logical design which specifies the knowledge base becomes an application executable by a runtime environment that combines an inference engine and a classic language runtime (e.g. a JVM).

Methods

Currently, the development is focused on the following methods:

ARD+

For a more complete description of ARD+ see

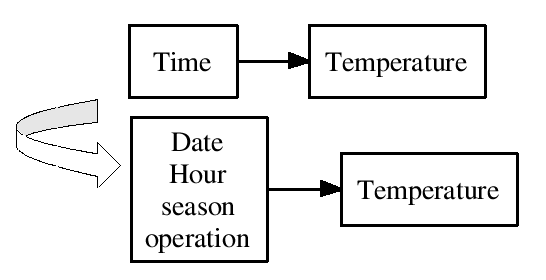

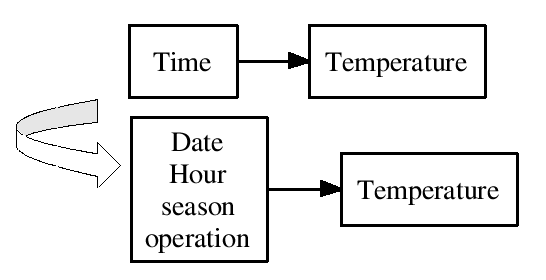

The ARD (Attribute Relationships Diagrams) method aims to capture relations between attributes in terms of Attributive Logic.

Attributes denote certain system properties.

A property is described by one or more attributes.

ARD captures functional dependencies among these properties.

A simple property is a property described by a single attribute, while a complex property is described by multiple attributes.

ARD indicates which system properties depend functionally on which other properties.

Such dependencies form a directed graph with nodes being properties.
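A minimal sketch of this structure, assuming a property is modelled as a set of attribute names and that an edge from A to B means B depends functionally on A. The classes `Property` and `ARDGraph`, the helper `prop`, and the example attribute names are hypothetical and not part of any HeKatE tool.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Property:
    """A system property, described by one or more attribute names."""
    attributes: frozenset

    def is_simple(self) -> bool:
        # Simple property: exactly one attribute; complex: several.
        return len(self.attributes) == 1


def prop(*names):
    """Convenience constructor (hypothetical helper)."""
    return Property(frozenset(names))


@dataclass
class ARDGraph:
    """Directed graph over properties; an edge (a, b) means b depends on a."""
    edges: set = field(default_factory=set)

    def add_dependency(self, independent, dependent):
        self.edges.add((independent, dependent))

    def depends_on(self, p):
        """Return the properties that p depends on functionally."""
        return {a for a, b in self.edges if b == p}


time_prop, temp_prop = prop("Time"), prop("Temperature")
g = ARDGraph()
g.add_dependency(time_prop, temp_prop)  # Temperature depends on Time
```

Making `Property` frozen keeps it hashable, so properties can serve as graph nodes inside sets.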

There are two kinds of attributes used by ARD: Conceptual Attributes and Physical Attributes.

A conceptual attribute is an attribute describing some general, abstract aspect of the system to be specified and refined.

Conceptual attribute names are capitalized, e.g. WaterLevel.

During the design process, conceptual attributes are finalized into (possibly multiple) physical attributes.

A physical attribute is an attribute describing a well-defined, atomic aspect of the system.

Names of physical attributes are not capitalized, e.g. theWaterLevelInTank1.

Physical attributes cannot be finalized; they appear in the final rules capturing knowledge about the system.
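The naming convention and the finalization constraint can be expressed as a small check. This is a sketch that additionally assumes attribute names are plain identifiers; `attribute_kind` and `can_finalize` are hypothetical helpers.

```python
def attribute_kind(name: str) -> str:
    """Classify an ARD attribute name by the capitalization convention:
    capitalized names are conceptual, all others are physical."""
    if not name.isidentifier():
        raise ValueError(f"not a valid attribute name: {name!r}")
    return "conceptual" if name[0].isupper() else "physical"


def can_finalize(name: str) -> bool:
    """Only conceptual attributes may be finalized; physical ones are final."""
    return attribute_kind(name) == "conceptual"


print(attribute_kind("WaterLevel"))            # conceptual
print(can_finalize("theWaterLevelInTank1"))    # False
```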

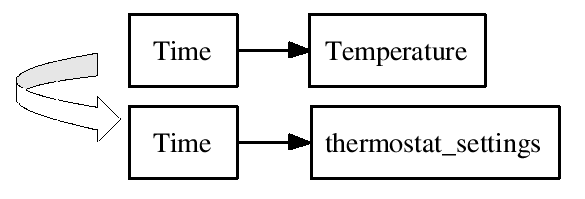

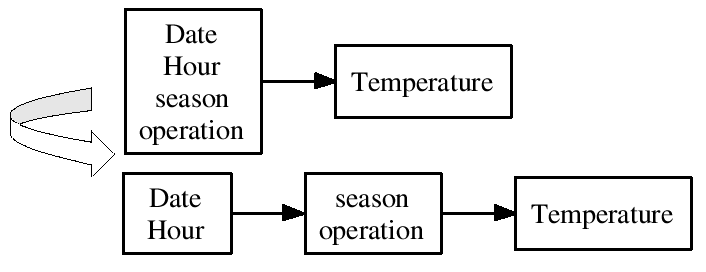

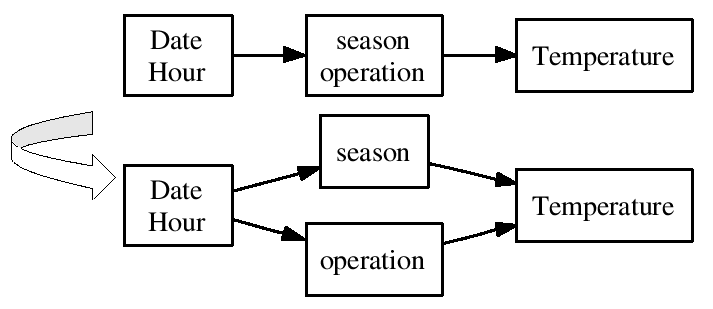

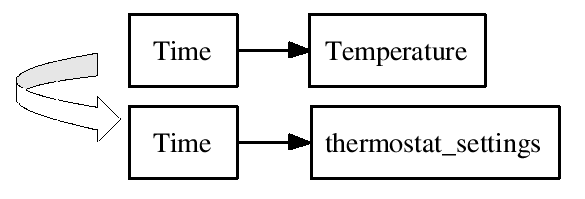

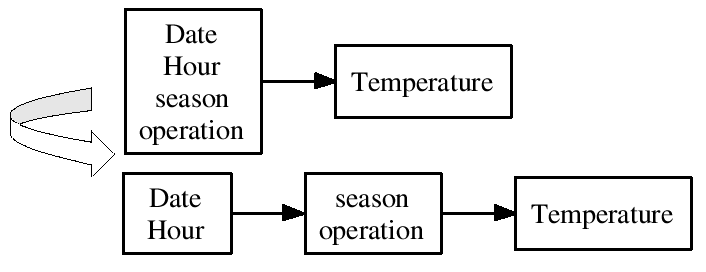

Two transformations are allowed during the ARD+ design: finalization and split.

Finalization transforms a simple property described by a conceptual attribute into a property described by one or more conceptual or physical attributes.

It introduces more specific knowledge about the given property.

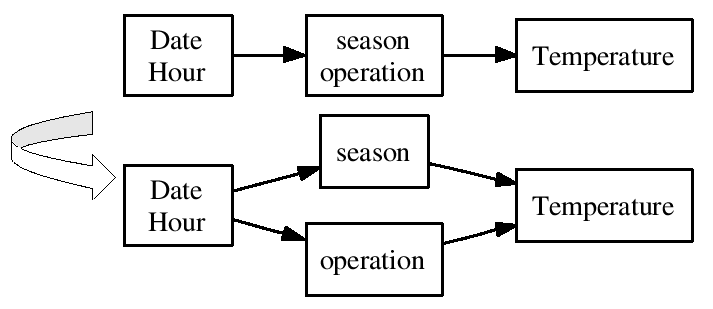

Split transforms a complex property into a number of properties and defines functional dependencies among them.
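Both transformations can be sketched on a single diagram level, assuming a property is a frozenset of attribute names and a level is a set of properties plus a set of (independent, dependent) pairs. How the dependencies of a split property are redistributed is a design decision; in this sketch the designer simply supplies the replacement dependencies. All names (`ARDLevel`, `finalize`, `split`) and the Thermostat-style attributes are hypothetical.

```python
from dataclasses import dataclass, field

Prop = frozenset  # in this sketch a property is a frozenset of attribute names


@dataclass
class ARDLevel:
    properties: set = field(default_factory=set)
    deps: set = field(default_factory=set)  # (independent, dependent) pairs


def finalize(level, old, new_attrs):
    """Finalization: a simple property with a conceptual attribute becomes a
    property described by one or more conceptual or physical attributes."""
    if len(old) != 1 or not next(iter(old))[0].isupper():
        raise ValueError("finalization needs a simple property with a conceptual attribute")
    new = Prop(new_attrs)

    def swap(p):
        return new if p == old else p

    # The finalized property keeps all dependencies of the original one.
    return ARDLevel(
        properties={swap(p) for p in level.properties},
        deps={(swap(a), swap(b)) for a, b in level.deps},
    )


def split(level, old, parts, new_deps):
    """Split: a complex property becomes several properties. Dependencies that
    touched `old` are dropped; the designer supplies `new_deps` instead."""
    if len(old) < 2:
        raise ValueError("only a complex property can be split")
    new_props = {Prop(p) for p in parts}
    if Prop().union(*new_props) != old:
        raise ValueError("the parts must cover exactly the split property's attributes")
    kept = {(a, b) for a, b in level.deps if old not in (a, b)}
    return ARDLevel(
        properties=(level.properties - {old}) | new_props,
        deps=kept | {(Prop(a), Prop(b)) for a, b in new_deps},
    )


thermostat = Prop({"Thermostat"})
lvl0 = ARDLevel({thermostat})
lvl1 = finalize(lvl0, thermostat, {"Time", "Temperature"})
lvl2 = split(lvl1, Prop({"Time", "Temperature"}), [{"Time"}, {"Temperature"}],
             [({"Time"}, {"Temperature"})])
```

Each call returns a new level rather than mutating the old one, which keeps every intermediate level available.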

During the design process, upon splitting and finalization, the ARD model grows.

This growth is expressed by consecutive diagram levels, making the design more and more specific.

This constitutes the hierarchical model.

Consecutive levels make a hierarchy of more and more detailed diagrams describing the designed system.

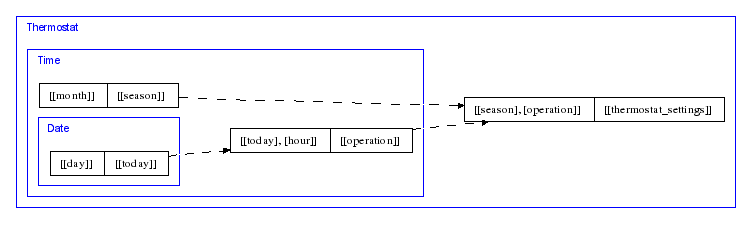

Such a hierarchical model is implemented by storing, at any time, the lowest available (most detailed) diagram level, together with the additional information needed to recreate all the higher levels: the so-called Transformation Process History (TPH).

It captures information about changes made to properties at consecutive diagram levels.
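Recreating the higher levels from the lowest one can be sketched as undoing the recorded transformations in reverse order. The sketch assumes one transformation per diagram level and tracks only properties, not dependencies; the `TPHEntry` record layout is hypothetical, not the actual TPH format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TPHEntry:
    """One recorded transformation: `source` was replaced by `results`."""
    kind: str           # "finalization" or "split"
    source: frozenset   # the property that was transformed
    results: tuple      # the properties it was transformed into


def recreate_levels(lowest, history):
    """Rebuild the property sets of all levels, top level first, from the
    lowest (most detailed) level by undoing the history in reverse order."""
    current = set(lowest)
    levels = [set(current)]
    for entry in reversed(history):
        current = (current - set(entry.results)) | {entry.source}
        levels.append(set(current))
    return levels[::-1]


T = frozenset({"Thermostat"})
TT = frozenset({"Time", "Temperature"})
TI, TE = frozenset({"Time"}), frozenset({"Temperature"})
history = [
    TPHEntry("finalization", T, (TT,)),
    TPHEntry("split", TT, (TI, TE)),
]
levels = recreate_levels({TI, TE}, history)  # [{T}, {TT}, {TI, TE}]
```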

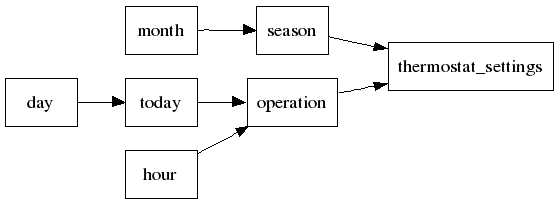

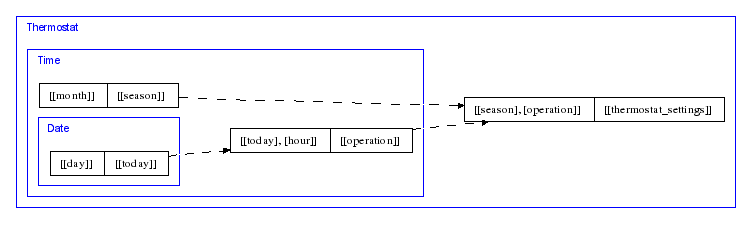

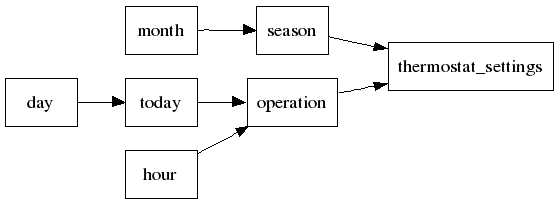

An example of a complete ARD+ design (the lowest level) is shown below.

Based on the ARD, rule prototypes complying with XTT can be automatically generated, as shown below.
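One straightforward way to derive such prototypes from the dependencies of the lowest-level diagram: for each dependent property, take the attributes of the properties it depends on as conditions and its own attributes as decisions. This is a hedged sketch of the idea, not the HeKatE generation algorithm; the function `rule_prototypes` and the attribute names are hypothetical, loosely inspired by the Thermostat case.

```python
def rule_prototypes(deps):
    """For every dependent property, build one prototype: the condition
    attributes come from the properties it depends on, the decision
    attributes are its own. Returns sorted (conditions, decisions) pairs."""
    conditions = {}
    for independent, dependent in deps:
        conditions.setdefault(dependent, set()).update(independent)
    return sorted(
        (tuple(sorted(conds)), tuple(sorted(dependent)))
        for dependent, conds in conditions.items()
    )


P = frozenset
deps = [
    (P({"month"}), P({"season"})),
    (P({"day"}), P({"today"})),
    (P({"today"}), P({"operation"})),
    (P({"hour"}), P({"operation"})),
    (P({"season"}), P({"thermostat_setting"})),
    (P({"operation"}), P({"thermostat_setting"})),
]
for conds, decs in rule_prototypes(deps):
    print(conds, "->", decs)
```

Each resulting pair can be read as the header of one decision table: condition columns on the left, decision columns on the right.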

XTT+

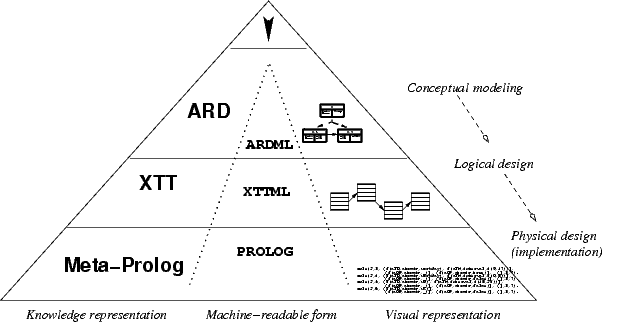

The XTT (EXtended Tabular Trees) knowledge representation has been proposed in order to solve some common design, analysis, and implementation problems present in rule-based systems (RBS).

In this method, three important representation levels have been addressed:

visual – the model is represented by a hierarchical structure of linked extended decision tables,

logical – tables correspond to sequences of extended decision rules,

implementation – rules are processed using a Prolog representation.

On the visual level the model is composed of extended decision tables.

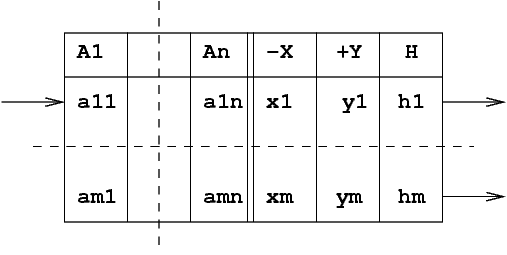

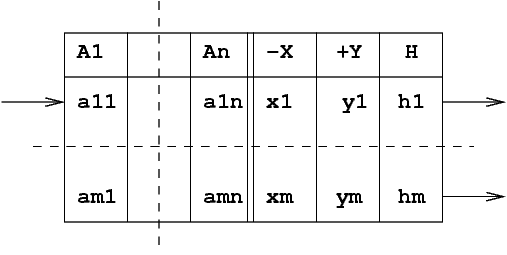

A single table is presented below.

The table represents a set of rules having the same attributes.

An XTT rule includes two main extensions compared to classic RBS:

1) non-atomic attribute values, used both in conditions and decisions,

2) non-monotonic reasoning support, with dynamic assert/retract operations in decision part.

Every table row corresponds to a decision rule.

Rows are interpreted from the top row to the bottom one.

Tables can be linked in a graph-like structure.

A link is followed when a rule (row) is fired.

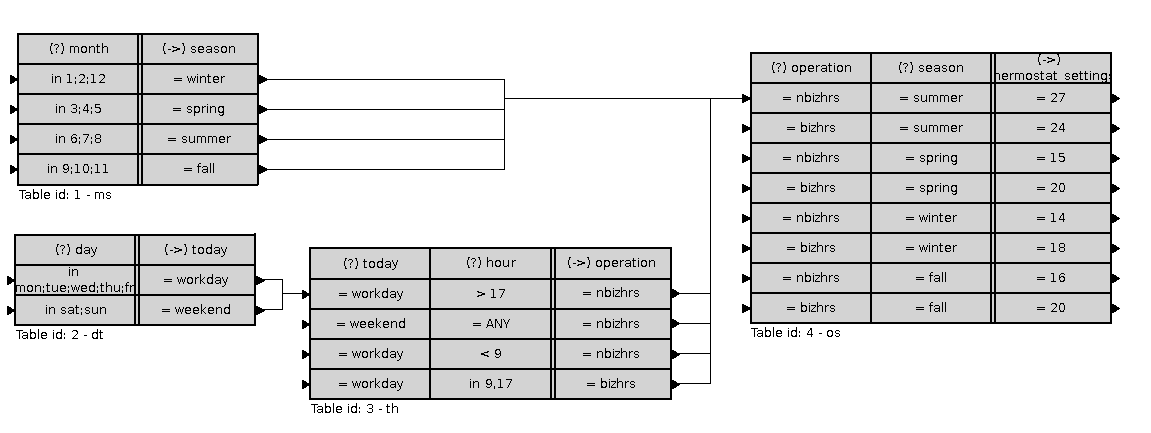

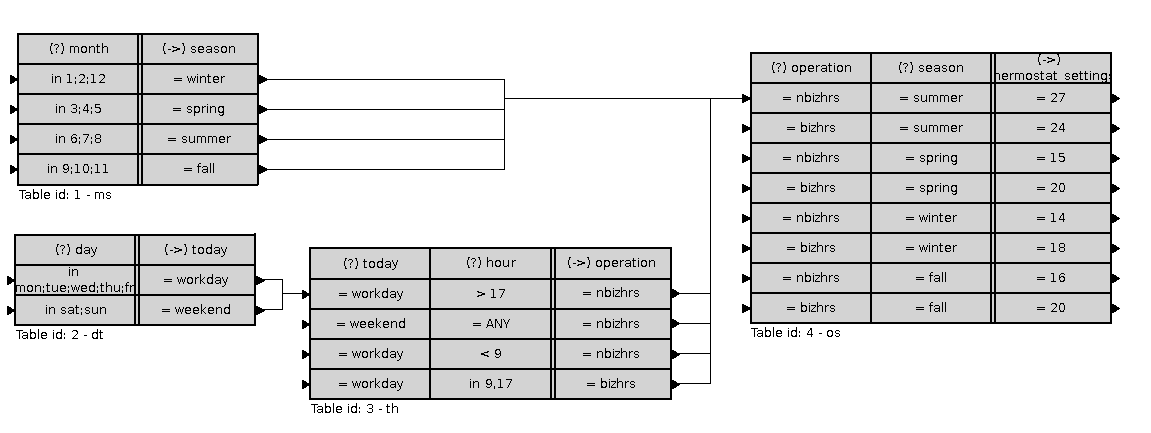

The structure below corresponds to the ARD+ design presented above.

On the logical level a table corresponds to a number of rules, processed in a sequence.

If a rule is fired and it has a link, the inference engine processes the rule in another table.
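The table and link semantics described above can be sketched as a small interpreter. It assumes first-match semantics within a table (rows are scanned top to bottom and the first rule whose conditions hold is fired), non-atomic conditions given as sets of admissible values, and that inference stops when a fired rule has no link or no rule matches. The `Rule` class, `fire_table`, `run`, and the water-tank tables are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    conditions: dict            # attribute -> set of admissible values
    decisions: dict             # attribute -> value assigned when fired
    link: Optional[str] = None  # table to continue with, if any


def fire_table(table, facts):
    """Scan rows top to bottom and fire the first rule whose conditions hold.
    Returns the fired rule's link, or None if it has no link or nothing matched."""
    for rule in table:
        if all(facts.get(attr) in allowed for attr, allowed in rule.conditions.items()):
            facts.update(rule.decisions)
            return rule.link
    return None


def run(tables, start, facts, max_steps=100):
    """Process tables, following links, until no further link is produced."""
    current, steps = start, 0
    while current is not None:
        if steps >= max_steps:
            raise RuntimeError("too many link steps (possible cycle)")
        current = fire_table(tables[current], facts)
        steps += 1
    return facts


TABLES = {
    "level": [
        Rule({"water_level": {"low", "very_low"}}, {"pump": "on"}, link="alarm"),
        Rule({"water_level": {"normal", "high"}}, {"pump": "off"}),
    ],
    "alarm": [
        Rule({"water_level": {"very_low"}}, {"alarm": True}),
        Rule({"water_level": {"low"}}, {"alarm": False}),
    ],
}

print(run(TABLES, "level", {"water_level": "very_low"}))
# {'water_level': 'very_low', 'pump': 'on', 'alarm': True}
```

The `max_steps` guard is a design choice of the sketch: links may form cycles, so a bound keeps a badly linked model from looping forever.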

The rule is based on an attributive language and corresponds to a Horn clause.
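As an illustration in our own notation (not necessarily the exact XTT formulation), consider a rule with condition attributes $A_1, \dots, A_n$, admissible value sets $t_1, \dots, t_n$, and a decision that assigns the value $d$ to attribute $H$. Written as an implication and then as a disjunction, it has exactly one positive literal, which is what makes it a Horn clause:

```latex
\begin{aligned}
(A_1 \in t_1) \land \dots \land (A_n \in t_n) &\rightarrow (H = d) \\
\equiv\; \lnot (A_1 \in t_1) \lor \dots \lor \lnot (A_n \in t_n) &\lor (H = d)
\end{aligned}
```

A decision part assigning several attributes corresponds to one such clause per assignment, since $p \rightarrow (q \land r)$ is equivalent to $(p \rightarrow q) \land (p \rightarrow r)$.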

Rules are interpreted using a unified knowledge and fact base that can be dynamically modified during the inference process using Prolog-like assert/retract operators in the rule decision part.
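This non-monotonic behaviour can be sketched with a fact base stored as a set of (attribute, value) pairs, where a decision part is a list of assert/retract operations. The encoding and the names `holds` and `apply_decisions` are assumptions for illustration; Prolog's own `assert`/`retract` built-ins operate on clauses in the database, which this Python sketch only imitates.

```python
def holds(fact_base, attribute, allowed):
    """True if the fact base contains attribute = v for some v in `allowed`."""
    return any(a == attribute and v in allowed for a, v in fact_base)


def apply_decisions(fact_base, decisions):
    """Execute decision-part operations in order, modifying the fact base in place."""
    for op, fact in decisions:
        if op == "assert":
            fact_base.add(fact)
        elif op == "retract":
            fact_base.discard(fact)
        else:
            raise ValueError(f"unknown operation: {op!r}")


facts = {("water_level", "low"), ("pump", "off")}
if holds(facts, "water_level", {"low", "very_low"}):
    # Non-monotonic update: a previously true fact is withdrawn.
    apply_decisions(facts, [("retract", ("pump", "off")), ("assert", ("pump", "on"))])
```

After the update, a condition such as `pump = off` that held before no longer holds, which is exactly what a monotonic rule system cannot express.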

Rules are implemented using a Prolog-based representation built from terms, which is a flexible solution.

However, it requires a dedicated meta-interpreter.

Currently, the base XTT is being extended at the syntax level towards XTT+ to provide better expressiveness, and generalized into GREP to cover more general cases; see gjn2007inap.

Another area of development is formalism extensions, such as temporal and fuzzy ones; see ali2008flairs.

Markup

Translation

The following knowledge transformation scenarios are considered, namely transformation for:

interoperability purposes,

execution purposes,

validation and verification purposes,

visualization purposes.

See gjn2007cms-knowtrans for some general ideas.
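For the execution scenario, one possible translation step is rendering a rule as Prolog text. The `rule/4`, `cond/2` and `set/2` term layout below is hypothetical, chosen only to produce syntactically valid Prolog facts; it is not the HeKatE format. Any atom that is not a plain lowercase identifier is quoted, and only strings and integers are supported.

```python
import re

_PLAIN_ATOM = re.compile(r"[a-z][A-Za-z0-9_]*\Z")


def prolog_constant(value):
    """Render a Python int or str as a Prolog number or atom."""
    if isinstance(value, bool) or not isinstance(value, (int, str)):
        raise TypeError(f"unsupported value: {value!r}")
    if isinstance(value, int):
        return str(value)
    if _PLAIN_ATOM.match(value):
        return value
    escaped = value.replace("\\", "\\\\").replace("'", "\\'")
    return f"'{escaped}'"


def _condition(attr, values):
    vals = ", ".join(prolog_constant(v) for v in sorted(values))
    return f"cond({prolog_constant(attr)}, [{vals}])"


def rule_to_prolog(table, number, conditions, decisions):
    """Translate one rule (set-valued conditions, atomic decisions) into a
    Prolog fact of the hypothetical form rule(Table, No, Conds, Decs)."""
    conds = ", ".join(_condition(a, vs) for a, vs in sorted(conditions.items()))
    decs = ", ".join(
        f"set({prolog_constant(a)}, {prolog_constant(v)})"
        for a, v in sorted(decisions.items())
    )
    return f"rule({prolog_constant(table)}, {number}, [{conds}], [{decs}])."


print(rule_to_prolog("level", 1, {"water_level": {"low", "very_low"}}, {"pump": "on"}))
# rule(level, 1, [cond(water_level, [low, very_low])], [set(pump, on)]).
```

Sorting attributes and values makes the output deterministic, which helps when generated code is compared or versioned.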

Process

Applications

The project aims at applications in the fields of:

Cases

There are several benchmark system design cases considered as the HeKatE testbed.

Thermostat

UServ

Current development tools include:

Development

HeKatE is currently under heavy development.

Members of the team use the development part.

Stay tuned!